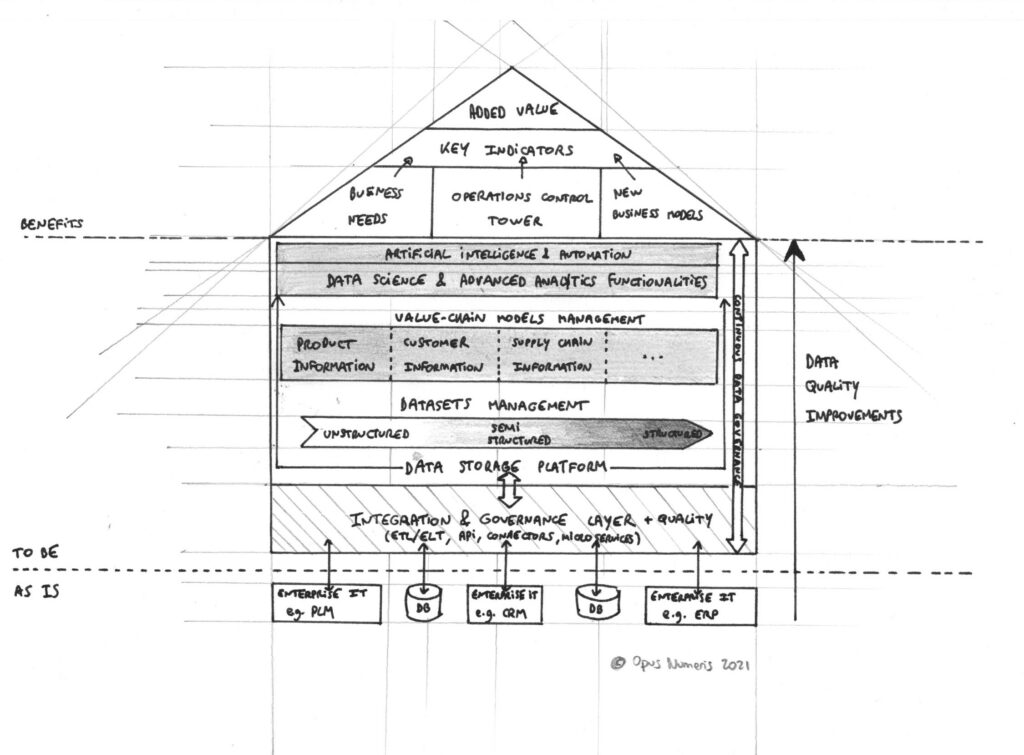

Since the 1970s and the third phase of the industrial revolution, companies have focused their efforts on structuring their processes and related data (in that order) by adopting largely standardized enterprise software (ERP, MES, CRM, PLM, etc.). This has allowed them to rationalize business processes, streamline activities, structure key transactional data for operations, and attain some efficiency benefits. However, because very few organizations can run all their activities on a single enterprise software system, they still struggle to integrate the different software bricks they use. The usual answer, point-to-point integration between data models, is complex because some data models change over time (to comply with new norms or regulations, or to adapt to new products or business models). This makes it very difficult to establish a complete, seamless digital continuum within an organization, that is, an intelligent connection of all data sources that allows data to flow between them. For instance, integrating PLM with ERP or PLM with MES is very challenging and depends on the types of software used on both ends. IT architecture also evolves within organizations, and integration between existing bricks may have to be rethought when new software is implemented or when a different data model is adopted (think of an SAP ECC to S/4HANA transition, for instance). Because processes and technical constraints, rather than data, are at the center of the effort today, large transformations are complex and may end up providing very few benefits for business data consumers, who want to cross-analyze data from different sources to produce new insights.

We offer a different, complementary approach whose aim is to set up a dedicated product supporting the construction and formalization of a proper enterprise data backbone, one that encompasses wider standards (bridging industry norms, legacy data models, future standards of the extended enterprise, ontologies…). Our approach focuses on data and its lifecycle rather than on processes, and it emphasizes the benefits of data usage and the value that data can create. Once the key enterprise software systems have been identified, we focus on building the data backbone (a unified data model and policies hosted in a data platform) along with the intelligent integration layers (a technical integration vehicle plus data governance and quality rules) that feed the platform.

The product value chain explorer is a “data backbone” accelerator that focuses on Product Data and the underlying Product Value Chain. On top of a unified data model that establishes the structural connections between PLM and ERP data models (and possibly others), we built data reporting and analysis capabilities that allow any business user to consume data, whether through reports or queries on the existing dataset, through advanced analytics, or by creating additional data through data science. The data platform also manages semi-structured and completely unstructured data, which can then be paired or cross-analyzed with this unified model. Our accelerator provides a concrete, actionable answer to business challenges (lack of data-driven control over the entire value chain, difficulty meeting regulatory transparency requirements), and it could also prove a more efficient way to drive data normalization from actual usage. We even believe it could boost your innovation by bringing a new, different perspective on your data.
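To make the idea of a unified data model more concrete, here is a minimal sketch in Python of how a PLM record and an ERP record might be connected into one product view. It is an illustration only, not the accelerator's actual model: the class names (`PlmPart`, `ErpMaterial`, `UnifiedProduct`), their fields, and the choice of the part number as the shared key are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class PlmPart:
    """Hypothetical engineering-side record, as a PLM system might expose it."""
    part_number: str
    revision: str
    description: str


@dataclass(frozen=True)
class ErpMaterial:
    """Hypothetical operations-side record, as an ERP system might expose it."""
    material_id: str
    part_number: str  # assumed shared key between PLM and ERP
    plant: str
    standard_cost: float


@dataclass(frozen=True)
class UnifiedProduct:
    """Hypothetical unified view; ERP fields stay None when no match exists."""
    part_number: str
    revision: str
    description: str
    material_id: Optional[str] = None
    plant: Optional[str] = None
    standard_cost: Optional[float] = None


def unify(parts: List[PlmPart], materials: List[ErpMaterial]) -> List[UnifiedProduct]:
    """Left-join PLM parts to ERP materials on the assumed shared part number."""
    # Index ERP materials by part number; one part may exist in several plants.
    by_part: Dict[str, List[ErpMaterial]] = {}
    for material in materials:
        by_part.setdefault(material.part_number, []).append(material)

    unified: List[UnifiedProduct] = []
    for part in parts:
        matches = by_part.get(part.part_number, [])
        if not matches:
            # Keep the part visible even without ERP data, so gaps can be reported.
            unified.append(UnifiedProduct(part.part_number, part.revision, part.description))
            continue
        for material in matches:
            unified.append(
                UnifiedProduct(
                    part.part_number,
                    part.revision,
                    part.description,
                    material.material_id,
                    material.plant,
                    material.standard_cost,
                )
            )
    return unified


# Example usage with made-up records.
parts = [PlmPart("P-100", "B", "Pump housing"), PlmPart("P-200", "A", "Seal")]
materials = [ErpMaterial("M-1", "P-100", "FR01", 12.5)]
products = unify(parts, materials)
```

Keeping unmatched parts in the result, rather than dropping them, is a deliberate choice in this sketch: records that exist on only one side are often exactly the ones a data quality rule would want to surface.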